This post was imported from my old Drupal blog. To see the full thing, including comments, it's best to visit the Internet Archive.

Update 2009-11-08: The developers of the Provenance Vocabulary tell me that the pattern I used below isn’t correct, and there doesn’t currently seem to be a method of describing what I want to describe using that vocabulary. But it’s still under development, so hopefully it will become usable soon.

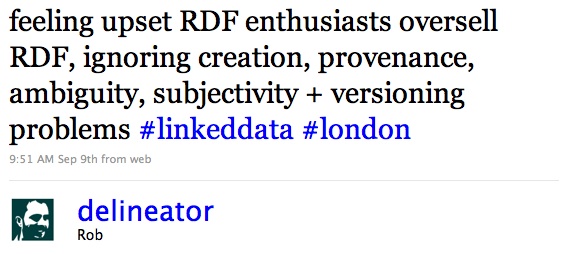

One of my favourite tweets from Rob McKinnon (aka @delineator) is this one:

because it’s one of the things that bugs me on occasion too, and because the issues he mentions are so vitally important when we’re talking about public sector information but (because they’re the hard issues) are easy to de-prioritise in the rush to make data available.

Let’s go back to basics: How do you know whether you can trust a piece of information? Think of an infographic in your daily newspaper showing the results of a survey. You could just decide based on your trust in the newspaper itself. But if you’re feeling suspicious, there are any number of things that you need to trust:

- the designer who created the infographic, that they didn’t skew the graphic to make it imply something that the data doesn’t warrant

- the data munger who cleaned up the data and supplied it to the designer, that they didn’t introduce errors into the data while cleaning it up

- the organisation who published the data, that they aggregated it accurately and published all the results

- the organisation who conducted the survey, that they surveyed a large enough and representative enough collection of people and collated the results accurately

- the people who responded to the survey, that they didn’t lie

What enables us to determine how much to trust the results of each of these steps? Well, if the organisation who conducted the survey published enough information that anyone could replicate the survey if they wanted to, we're more likely to trust their results. That's partly because publishing enough detail for someone to replicate the study necessarily exposes any biases the study might have, and partly because they're unlikely to be that open about what they did if they were trying to cover something up. That's why reproducibility is one of the fundamental principles of the scientific method.

That same principle applies at the data-processing end of the chain of processes that led to the infographic. We will trust the infographic more if we can get hold of

- the raw survey results (open data)

- details about all the programs that aggregated, cleaned up or otherwise transformed the data, including their source code (open source)

because with these details we could, if we chose, replicate the resulting data and create our own visualisation of it.

The question is, given some RDF, how do we provide enough detail about how it was generated to enable others to work out whether to trust it or not?

The answer should come as no surprise: “With RDF!”

I’ve been looking at a couple of vocabularies for recording provenance: the Open Provenance Model and the Provenance Vocabulary.

The Open Provenance Model is a general purpose model that can be expressed in RDF. It splits the world into three main things:

- Artifacts which are things that you might want to record the provenance of

- Processes which are things that happen to artifacts

- Agents who initiate processes

These three types of things interact with each other in three main ways:

- artifacts are generated by processes

- processes use artifacts

- processes are controlled by agents

and two subsidiary ones which occur as a result of these:

- artifacts are derived from other artifacts

- processes trigger other processes
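To make the three core relationships and the two that follow from them concrete, here is a minimal Python sketch. The class and attribute names are my own, not part of any OPM serialisation. It also reads "derived from" loosely, as "generated by a process that used"; the OPM specification itself is more careful about when a derivation can be inferred.

```python
from dataclasses import dataclass, field


@dataclass
class OPMGraph:
    """Toy store for the three main OPM relationships (names are illustrative)."""
    used: set[tuple[str, str]] = field(default_factory=set)               # (process, artifact)
    was_generated_by: set[tuple[str, str]] = field(default_factory=set)   # (artifact, process)
    was_controlled_by: set[tuple[str, str]] = field(default_factory=set)  # (process, agent)

    def derived_from(self) -> set[tuple[str, str]]:
        """(a2, a1) pairs where a2 was generated by a process that used a1.

        Simplification: every input of a process is treated as a source of
        every output of that process.
        """
        return {
            (a2, a1)
            for (a2, gen_process) in self.was_generated_by
            for (use_process, a1) in self.used
            if gen_process == use_process
        }

    def triggered_by(self) -> set[tuple[str, str]]:
        """(p2, p1) pairs where p2 used an artifact that p1 generated."""
        return {
            (p2, p1)
            for (p2, artifact) in self.used
            for (generated, p1) in self.was_generated_by
            if artifact == generated
        }


# Hypothetical chain: download a zip, unzip it, transform the CSV into RDF.
g = OPMGraph()
g.was_generated_by |= {("zip", "download"), ("csv", "unzip"), ("rdf", "transform")}
g.used |= {("unzip", "zip"), ("transform", "csv")}
g.was_controlled_by.add(("transform", "Jeni"))

print(sorted(g.derived_from()))  # [('csv', 'zip'), ('rdf', 'csv')]
print(sorted(g.triggered_by()))  # [('transform', 'unzip'), ('unzip', 'download')]
```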

So far so good. But then it starts getting complicated. A given process might use and generate several artifacts (for example, an XSLT transformation might use a source document and a stylesheet, and generate an index and a number of pages), so each of the three main relationships above is qualified through the use of a particular role.

Further, different artifacts might be used or created at different times in the process, so each of the relationships is also qualified with a timestamp. (The Open Provenance Model is built to describe processes that might take days, or even longer, with different bits of information coming into play at different times.)
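A rough sketch of what qualifying each edge with a role and a timestamp looks like, using the XSLT example above. The role names, artifact names and times are all hypothetical; the point is only that each edge carries its own role and time, so you can ask questions like "which pages did this run generate, in order?".

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Qualified:
    """One used / wasGeneratedBy edge, qualified with a role and a time."""
    kind: str          # "used" or "wasGeneratedBy"
    process: str
    artifact: str
    role: str          # hypothetical role names such as "source" or "stylesheet"
    time: datetime


def at(hour: int, minute: int) -> datetime:
    return datetime(2009, 10, 24, hour, minute, tzinfo=timezone.utc)


# Hypothetical XSLT run: two inputs, several outputs appearing at different times.
edges = [
    Qualified("used", "xslt-run", "source.xml", "source", at(12, 0)),
    Qualified("used", "xslt-run", "style.xsl", "stylesheet", at(12, 0)),
    Qualified("wasGeneratedBy", "xslt-run", "index.html", "index", at(12, 1)),
    Qualified("wasGeneratedBy", "xslt-run", "page-2.html", "page", at(12, 3)),
    Qualified("wasGeneratedBy", "xslt-run", "page-1.html", "page", at(12, 2)),
]


def artifacts_in_role(edges, process, kind, role):
    """Artifacts a process used or generated in a given role, earliest first."""
    hits = [e for e in edges if (e.process, e.kind, e.role) == (process, kind, role)]
    return [e.artifact for e in sorted(hits, key=lambda e: e.time)]


print(artifacts_in_role(edges, "xslt-run", "wasGeneratedBy", "page"))
# ['page-1.html', 'page-2.html']
```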

And then to add one more twist, each provenance graph is just one possible account of the history of an item; in particular a different account might break down the processes into subprocesses, or aggregate the processes differently.

The complications of having timestamps on relationships, and of having multiple accounts, mean that describing provenance with the Open Provenance Model is a little tedious, especially when you're mostly concerned with the provenance of data (as opposed to, say, a car).

The Provenance Vocabulary has the same basic types, which they call Artifacts, Executions and Actors, but the different roles that artifacts play are indicated through the properties prv:usedData, prv:usedGuideline and its sub-properties, and timestamps are associated directly with executions and artifacts. It’s also specifically oriented towards the kinds of operations that we typically need to do when transforming and publishing linked data.

To give you an idea about how it might work, here’s an illustration of how we might use the provenance vocabulary to describe the construction of some RDF from a CSV file:

Here are the prefix bindings. Note the reuse of the HTTP vocabulary, FOAF, Dublin Core and VoID.

```turtle
@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .
@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .
@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .
@prefix prv: <http://purl.org/net/provenance/ns#> .
@prefix prvTypes: <http://purl.org/net/provenance/types#> .
@prefix http: <http://www.w3.org/2006/http#> .
@prefix foaf: <http://xmlns.com/foaf/0.1/> .
@prefix dct: <http://purl.org/dc/terms/> .
@prefix void: <http://rdfs.org/ns/void#> .
```
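The prefixes are only abbreviations: a prefixed name like `prv:usedData` stands for the namespace IRI followed by the local part. A minimal Python sketch of that expansion (the `expand` helper is purely illustrative and ignores Turtle's rules on which characters a local name may contain):

```python
# Prefix bindings copied from the Turtle above.
PREFIXES = {
    "xsd": "http://www.w3.org/2001/XMLSchema#",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
    "prv": "http://purl.org/net/provenance/ns#",
    "prvTypes": "http://purl.org/net/provenance/types#",
    "http": "http://www.w3.org/2006/http#",
    "foaf": "http://xmlns.com/foaf/0.1/",
    "dct": "http://purl.org/dc/terms/",
    "void": "http://rdfs.org/ns/void#",
}


def expand(qname: str) -> str:
    """Expand a prefixed name such as 'prv:usedData' into a full IRI."""
    prefix, sep, local = qname.partition(":")
    if not sep or prefix not in PREFIXES:
        raise ValueError(f"unknown prefix in {qname!r}")
    return PREFIXES[prefix] + local


print(expand("prv:usedGuideline"))  # http://purl.org/net/provenance/ns#usedGuideline
```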

The provenance record itself, and caches of the relevant documents, need to live somewhere web accessible; a log subdirectory on my website seems as good a place as any.

```turtle
@base <http://www.jenitennison.com/log/2009-10-24/> .
```
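Relative IRIs in the rest of the record, such as `<cache/region.xsl>` and `<>`, are resolved against this base, following the RFC 3986 rules. Python's `urllib.parse.urljoin` should give the same answers for simple relative references like these; a quick check, with a `resolve` helper that exists only for illustration:

```python
from urllib.parse import urljoin

BASE = "http://www.jenitennison.com/log/2009-10-24/"


def resolve(relative: str) -> str:
    """Resolve a relative IRI from the Turtle against the @base above."""
    return urljoin(BASE, relative)


for rel in ["cache/region.xsl", "log.ttl", ""]:  # "" is what <> contains
    print(f"<{rel}> -> {resolve(rel)}")
```

So `<>` refers to the log directory for the day itself, which is why it can be described as a `void:Dataset` later on.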

Now the meat of the provenance information. I’m defining a dataset identified as http://statistics.data.gov.uk/id/region, which is a dataset of Government Office Regions within the UK. The resources described by the dataset have URIs of the form http://statistics.data.gov.uk/id/region/{regionCode}, where regionCode is a single capital letter (assigned by ONS).

```turtle
<http://statistics.data.gov.uk/id/region> a void:Dataset ;
    dct:title "Government Office Regions" ;
    foaf:homepage <http://statistics.data.gov.uk/doc/region> ;
    void:exampleResource <http://statistics.data.gov.uk/id/region/H> ;
    void:uriRegexPattern "http://statistics.data.gov.uk/id/region/[A-Z]" ;
    void:subset _:GORRDF .
```
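How a consumer might use `void:uriRegexPattern` to decide whether a URI belongs to the dataset, sketched in Python. This assumes the pattern is applied the way SPARQL's `REGEX` applies one, that is unanchored, so any URI *containing* a match counts; Python's `re.search` behaves the same way for a simple pattern like this one.

```python
import re

# Pattern copied from the void:uriRegexPattern above.
PATTERN = "http://statistics.data.gov.uk/id/region/[A-Z]"
# A possible tighter variant (my own suggestion): anchored, with literal dots.
STRICT = r"^http://statistics\.data\.gov\.uk/id/region/[A-Z]$"


def in_dataset(uri: str, pattern: str = PATTERN) -> bool:
    """Unanchored regex match of a URI against a dataset's URI pattern."""
    return re.search(pattern, uri) is not None


for uri in [
    "http://statistics.data.gov.uk/id/region/H",
    "http://statistics.data.gov.uk/id/region/HX",  # matches too: the pattern is unanchored
    "http://statistics.data.gov.uk/id/county/H",
]:
    print(uri, in_dataset(uri), in_dataset(uri, STRICT))
```

If region codes really are a single capital letter, the anchored form avoids accidentally claiming longer URIs that happen to start the same way.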

I’m not describing the provenance of this entire dataset here: the dataset of information about Government Office Regions will likely contain information from many different sources, created in many different ways at many different times. I’m just describing a particular subset of that information (identified here by a blank node with the ID _:GORRDF).

This dataset is captured as a dump in the web-accessible cache in which I’m keeping all the provenance-related information. Here we start seeing the provenance-related properties. The dataset was created by a prv:DataCreation event performed at 12:20 today by me. The creation used data from a CSV document that is also in the web-accessible cache, using the “guideline” (in this case an XSLT transformation) that is again in the web-accessible cache. I’ve also provided provenance information about that XSLT transformation (that it was created by me at 12:10 today; these times are made up, by the way! :)

```turtle
_:GORRDF a void:Dataset ;
    a prv:DataItem ;
    prv:containedBy <cache/GOR_DEC_2008_EN_NC.rdf> ;
    void:dataDump <cache/GOR_DEC_2008_EN_NC.rdf> ;
    prv:createdBy [
        a prv:DataCreation ;
        prv:performedAt "2009-10-24T12:20:00Z"^^xsd:dateTime ;
        prv:performedBy _:Jeni ;
        prv:usedData [
            a prv:DataItem ;
            prv:containedBy <cache/GOR_DEC_2008_EN_NC.csv> ;
        ] ;
        prv:usedGuideline [
            a prv:DataItem ;
            prv:containedBy <cache/region.xsl> ;
            prv:createdBy [
                a prv:DataCreation ;
                prv:performedAt "2009-10-24T12:10:00Z"^^xsd:dateTime ;
                prv:performedBy _:Jeni ;
            ]
        ] ;
    ] .
```
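To show how a consumer could walk this chain back to its inputs, here is a small Python sketch over the same statements written as plain triples. The anonymous `[ ... ]` nodes are given made-up blank-node labels (`_:creation`, `_:csvItem` and so on) so they can be referred to, and the `rdf:type` statements are left out.

```python
# The provenance statements from the block above, as (subject, predicate, object).
TRIPLES = [
    ("_:GORRDF", "prv:containedBy", "<cache/GOR_DEC_2008_EN_NC.rdf>"),
    ("_:GORRDF", "prv:createdBy", "_:creation"),
    ("_:creation", "prv:performedAt", "2009-10-24T12:20:00Z"),
    ("_:creation", "prv:performedBy", "_:Jeni"),
    ("_:creation", "prv:usedData", "_:csvItem"),
    ("_:creation", "prv:usedGuideline", "_:xslItem"),
    ("_:csvItem", "prv:containedBy", "<cache/GOR_DEC_2008_EN_NC.csv>"),
    ("_:xslItem", "prv:containedBy", "<cache/region.xsl>"),
    ("_:xslItem", "prv:createdBy", "_:xslCreation"),
    ("_:xslCreation", "prv:performedAt", "2009-10-24T12:10:00Z"),
    ("_:xslCreation", "prv:performedBy", "_:Jeni"),
]


def objects(subject, predicate):
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def inputs(item):
    """Documents holding the data and guidelines used to create `item`, recursively."""
    found = []
    for creation in objects(item, "prv:createdBy"):
        for prop in ("prv:usedData", "prv:usedGuideline"):
            for used in objects(creation, prop):
                found += [(prop, doc) for doc in objects(used, "prv:containedBy")]
                found += inputs(used)  # follow the inputs' own provenance, if any
    return found


for prop, doc in inputs("_:GORRDF"):
    print(prop, doc)
```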

Now we have some descriptions of those cached documents:

```turtle
<cache/GOR_DEC_2008_EN_NC.rdf> a prv:Document ;
    dct:format <http://www.iana.org/assignments/media-types/application/rdf+xml> ;
    rdfs:label "GOR_DEC_2008_EN_NC.rdf" .

<cache/region.xsl> a prv:Document ;
    dct:format <http://www.iana.org/assignments/media-types/application/xslt+xml> ;
    rdfs:label "gor.xsl" .

<cache/GOR_DEC_2008_EN_NC.csv> a prv:Document ;
    dct:format <http://www.iana.org/assignments/media-types/text/csv> ;
    rdfs:label "GOR_DEC_2008_EN_NC.csv" ;
    dct:isPartOf <cache/government-office-regions.zip> .
```

This last file – the CSV that contained the data – was part of a zip file. The zip file was retrieved via HTTP at 12:00 today through a GET request to the URI http://www.ons.gov.uk/about-statistics/geography/products/geog-products-area/names-codes/administrative/government-office-regions.zip, but I also make it available in the cache in case that original file either disappears or gets changed at a later date.

```turtle
<cache/government-office-regions.zip>
    a prv:Document ;
    rdfs:label "government-office-regions.zip" ;
    dct:format <http://www.iana.org/assignments/media-types/application/zip> ;
    dct:hasPart <cache/GOR_DEC_2008_EN_NC.csv> ;
    prv:retrievedBy [
        a prvTypes:HTTPBasedDataAccess ;
        prv:performedAt "2009-10-24T12:00:21Z"^^xsd:dateTime ;
        prvTypes:exchangedHTTPMessage [
            a http:GetRequest ;
            http:requestURI "http://www.ons.gov.uk/about-statistics/geography/products/geog-products-area/names-codes/administrative/government-office-regions.zip"^^xsd:anyURI
        ] ;
    ] .
```
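Writing this by hand gets old quickly, so it seems worth generating at the moment the file is fetched. A minimal Python sketch that lists the zip's members and emits the `dct:hasPart` and `prv:retrievedBy` statements. Assumptions: the members are unpacked into the same `cache/` directory, member names and the URL need no IRI escaping, and the `rdfs:label` and `dct:format` statements are left out. `describe_download` is a hypothetical helper, not part of any library.

```python
import io
import zipfile
from datetime import datetime, timezone


def describe_download(cache_path: str, url: str, zip_bytes: bytes,
                      retrieved_at: datetime) -> str:
    """Return Turtle describing a cached zip: its members and the HTTP GET that fetched it."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as zf:
        members = [name for name in zf.namelist() if not name.endswith("/")]
    stamp = retrieved_at.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines = [f"<{cache_path}> a prv:Document ;"]
    lines += [f"    dct:hasPart <cache/{name}> ;" for name in members]
    lines += [
        "    prv:retrievedBy [",
        "        a prvTypes:HTTPBasedDataAccess ;",
        f'        prv:performedAt "{stamp}"^^xsd:dateTime ;',
        "        prvTypes:exchangedHTTPMessage [",
        "            a http:GetRequest ;",
        f'            http:requestURI "{url}"^^xsd:anyURI',
        "        ]",
        "    ] .",
    ]
    return "\n".join(lines)


# Usage with an in-memory zip standing in for the real download (dummy CSV content).
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("GOR_DEC_2008_EN_NC.csv", "placeholder\n")

print(describe_download(
    "cache/government-office-regions.zip",
    "http://www.ons.gov.uk/about-statistics/geography/products/geog-products-area/"
    "names-codes/administrative/government-office-regions.zip",
    buf.getvalue(),
    datetime(2009, 10, 24, 12, 0, 21, tzinfo=timezone.utc),
))
```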

The final bits and pieces provide extra information about the resources that have been referenced above, including the provenance of the file that is providing this provenance information!

```turtle
_:Jeni a foaf:Person ;
    foaf:name "Jeni Tennison" ;
    foaf:homepage <http://www.jenitennison.com/> .

<http://www.jenitennison.com/log/> a void:Dataset ;
    dct:title "Jeni's Activity Log" ;
    foaf:homepage <http://www.jenitennison.com/log/> ;
    void:uriRegexPattern "http://www.jenitennison.com/log/(.+)" ;
    void:subset <> .

<> a void:Dataset ;
    dct:title "Jeni's log for 24th October 2009" ;
    foaf:homepage <> ;
    void:exampleResource <log.ttl> ;
    void:uriRegexPattern "http://www.jenitennison.com/log/2009-10-24/(.+)" ;
    void:subset [
        prv:containedBy <log.ttl> ;
        void:dataDump <log.ttl> ;
        prv:createdBy [
            a prv:DataCreation ;
            prv:performedAt "2009-10-24T18:57:00"^^xsd:dateTime ;
            prv:performedBy _:Jeni
        ] ;
    ] .

<log.ttl> a prv:Document ;
    dct:format <http://www.iana.org/assignments/media-types/text/turtle> .

<http://www.iana.org/assignments/media-types/application/xslt+xml>
    rdf:value "application/xslt+xml" ;
    rdfs:label "XSLT" .

<http://www.iana.org/assignments/media-types/application/rdf+xml>
    rdf:value "application/rdf+xml" ;
    rdfs:label "RDF/XML" .

<http://www.iana.org/assignments/media-types/text/turtle>
    rdf:value "text/turtle" ;
    rdfs:label "Turtle" .

<http://www.iana.org/assignments/media-types/application/zip>
    rdf:value "application/zip" ;
    rdfs:label "Zip" .

<http://www.iana.org/assignments/media-types/text/csv>
    rdf:value "text/csv" ;
    rdfs:label "CSV" .
```

This pattern for providing provenance information isn't a complete answer, because it doesn't address how you might assess the provenance of a particular statement. If I went to http://statistics.data.gov.uk/id/region/H, the only way I could establish that the rdfs:label (say) for the region was generated through the process described above would be to match the URI against the void:uriRegexPattern above, get hold of the original RDF from the cache, and work out whether it contains the rdfs:label statement that I'm interested in.
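The three steps just described, sketched in Python. The registry and its dump contents are hypothetical: in practice each dump would be fetched from the cache and parsed, whereas here it is an in-memory set of triples invented for illustration.

```python
import re

RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
REGION_H = "http://statistics.data.gov.uk/id/region/H"

# Hypothetical registry of provenance-described subsets: URI pattern plus dump contents.
SUBSETS = {
    "_:GORRDF": {
        "pattern": "http://statistics.data.gov.uk/id/region/[A-Z]",
        "dump": {(REGION_H, RDFS_LABEL, "London")},
    },
}


def sources_of(s: str, p: str, o: str) -> list[str]:
    """Subsets whose URI pattern matches the subject and whose dump holds the triple."""
    return [
        name
        for name, subset in SUBSETS.items()
        if re.search(subset["pattern"], s)   # step 1: does the URI match the pattern?
        and (s, p, o) in subset["dump"]      # steps 2 and 3: is the statement in the dump?
    ]


print(sources_of(REGION_H, RDFS_LABEL, "London"))  # ['_:GORRDF']
print(sources_of(REGION_H, RDFS_LABEL, "Londres"))  # []
```

It works, but every lookup means loading and scanning whole dumps, which is the weakness described above.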

I have a hunch that this would be more viable with named graphs: if statements with different provenance were actually placed in different graphs, then it would be possible with a SPARQL query to identify the graph(s) in which a statement was made, and their provenance. I think that the _:GORRDF blank node in the above could be a trix:Graph, for example.
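A rough illustration of why named graphs would help: if every statement is stored together with the graph it came from, finding its provenance is a direct lookup rather than a scan of dumps. This is a Python stand-in for a quad store; the graph names and their provenance descriptions are made up, and the docstring gives the roughly equivalent SPARQL query.

```python
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
REGION_H = "http://statistics.data.gov.uk/id/region/H"

# Hypothetical quad store: (graph, subject, predicate, object). Graph names are made up.
QUADS = {
    ("http://example.org/graph/gor-csv-2008", REGION_H, RDFS_LABEL, "London"),
    ("http://example.org/graph/hand-edits", REGION_H, RDFS_LABEL, "London"),
}

# Provenance recorded once per graph, e.g. by making _:GORRDF a named graph.
GRAPH_PROVENANCE = {
    "http://example.org/graph/gor-csv-2008":
        "XSLT over cache/GOR_DEC_2008_EN_NC.csv at 2009-10-24T12:20:00Z",
    "http://example.org/graph/hand-edits": "typed in by hand (hypothetical)",
}


def graphs_containing(s: str, p: str, o: str) -> list[str]:
    """Roughly: SELECT DISTINCT ?g WHERE { GRAPH ?g { <s> <p> "o" } }"""
    return sorted({g for (g, qs, qp, qo) in QUADS if (qs, qp, qo) == (s, p, o)})


for g in graphs_containing(REGION_H, RDFS_LABEL, "London"):
    print(g, "->", GRAPH_PROVENANCE[g])
```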

Regardless, the Provenance Vocabulary that I’ve used above seems to do the job reasonably well. I’m intending to try this approach out on a few datasets and see how it stands up to real-world complexities. Comments and suggestions appreciated.